Software Engineering Methodologies

Dynamic Verification

Michael L. Collard, Ph.D.

Department of Computer Science, The University of Akron

Dynamic Verification

- Executing the system with specific inputs in a specific environment and observing the product's behavior

- Should be used at every stage of the process

- Requires artifacts

- Software Testing

Software Test Plan

- Testing process

- Requirements traceability

- Tested items

- Testing schedule

- Test-recording procedures

- Hardware and software requirements

- Constraints

Program testing

- Can reveal the presence of errors, NOT their absence

- A "successful" test is a test that discovers one or more errors

- The only verification technique for non-functional requirements

- Should be used in conjunction with static verification to provide full coverage

Execution-based Testing

"Program testing can be a very effective way to show the presence of bugs, but is hopelessly inadequate for showing their absence." (Dijkstra)

- Fault: a "bug", an incorrect piece of code

- Failure: the result of a fault

- Error: a mistake made by the programmer/developer
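The three terms can be told apart in a small sketch. The function `average` and its inputs are hypothetical, chosen only to illustrate the definitions above: the error is the programmer's mistaken assumption, the fault is the wrong divisor in the code, and the failure is the wrong result that shows up only for some inputs.

```python
def average(values):
    """Return the arithmetic mean of a non-empty list of numbers."""
    # Fault: the divisor should be len(values). The programmer's error
    # (wrongly assuming every list has two items) put the constant 2 here.
    return sum(values) / 2


# The fault is present on every call, but a failure is observed only
# when the input has a length other than 2.
no_failure = average([4, 6])     # matches the expected mean
failure = average([3, 6, 9])     # differs from the expected mean of 6.0
```

A test using only two-element lists would never reveal this fault, which is why testing can show the presence of errors but not their absence.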

Testing and Debugging

- Testing is not the same as debugging

- Verification and validation are concerned with establishing the existence of defects in a program

- Debugging is concerned with locating and repairing these defects

- Debugging involves formulating a hypothesis about program behavior and then testing this hypothesis to find the system error

Testing Phases

- Component testing

  - Testing of individual program components

  - Usually the responsibility of the component developer

  - Exception: critical systems, where an independent team may test the components

  - Tests are derived from the developer's experience

- Integration testing

  - Testing of groups of components integrated to create a system or subsystem

  - The responsibility of an independent testing team

  - Tests are based on a system specification

Testing priorities

- Only exhaustive testing can show a program is free from defects

- Exhaustive testing is impossible

- Tests should exercise a system's capabilities rather than its components

- Testing old capabilities is more important than testing new capabilities

- Testing typical situations is more critical than boundary value cases

Test Data and Test Cases

- Test data

  - Inputs devised to test the system

- Test cases

  - Inputs to test the system and the predicted outputs if the system operates according to the specification
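The distinction can be sketched in a few lines. The function `absolute` and the helper `run` are hypothetical names used only for illustration: test data is a list of inputs, while a test case pairs each input with the output the specification predicts.

```python
def absolute(x):
    """Hypothetical function under test: return the absolute value of x."""
    return x if x >= 0 else -x


# Test data: inputs only.
test_data = [-3, 0, 7]

# Test cases: each input paired with the output predicted by the specification.
test_cases = [(-3, 3), (0, 0), (7, 7)]


def run(cases):
    """Return (input, predicted, actual) for every case whose output differs."""
    return [(x, expected, absolute(x))
            for x, expected in cases
            if absolute(x) != expected]


failures = run(test_cases)  # an empty list means every prediction held
```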

Development of Test Cases

- Test cases and test scenarios comprise much of the testware of a software system

- Black box test cases are developed by analyzing the domain and the system requirements/specifications.

- White box test cases are developed by examining the source code's behavior

- AKA Clear box, Glass box

Methods of testing

- Test to specification:

  - Black box, data-driven, functional testing

  - Ignores the code; only the specification documents are used to develop test cases

- Test to code:

  - White/Glass/Clear box, program/implementation logic-driven testing

  - Ignores the specification and only examines the code

Guarantee Correctness?

- Can we guarantee a program is correct?

- Yes, but each program would require an individual proof

- A general algorithm to do so for every program would also decide the Halting Problem (which is undecidable for Turing machines)

Black-box testing

- An approach to testing where we view the program as a 'black box'

- The system specification is the basis of the program test cases

- Test planning can begin early in the software process

Paring down test cases

- Each test case introduces a cost

- Use methods that take advantage of symmetries, data equivalencies, and independencies to reduce the number of necessary test cases:

- Equivalence Testing

- Boundary Value Analysis

- Determine the ranges of the working system

- Develop equivalence classes of test cases

- Examine the boundaries of these classes carefully

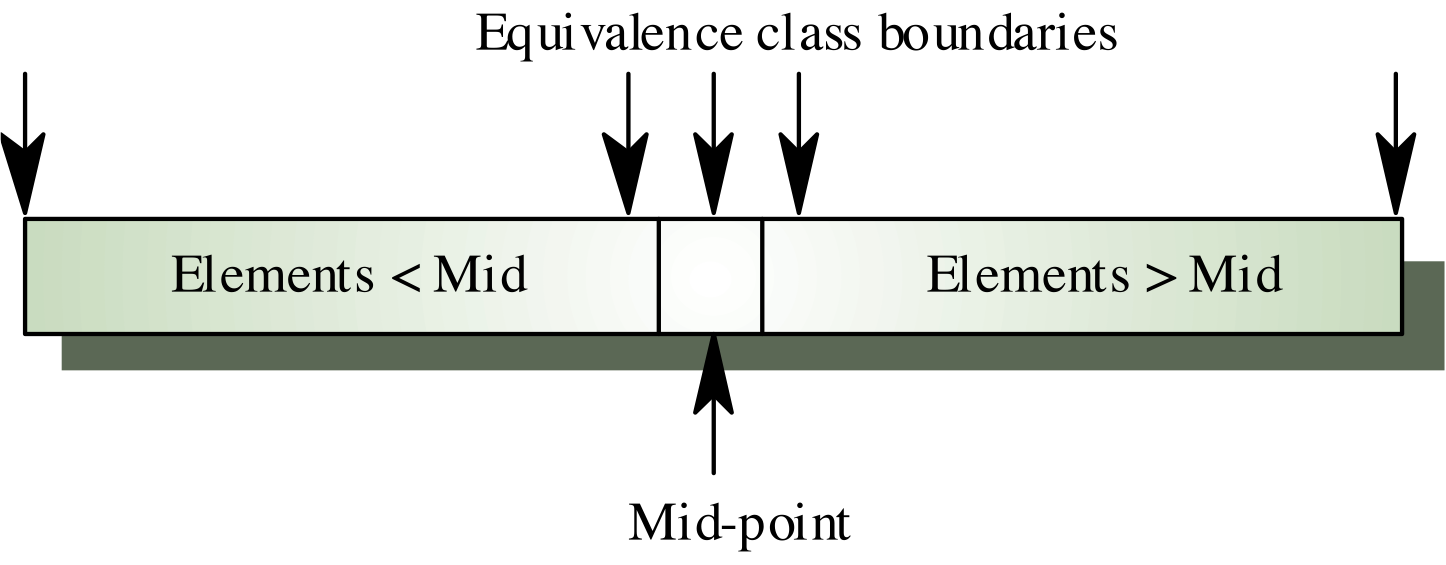

Equivalence partitioning

- Input data and output results often fall into different classes, where all members of a class are related

- Each of these classes is an equivalence partition where the program behaves equivalently for each class member

- Test cases should be chosen from each partition

Boundary-Value Testing

- Partition system inputs and outputs into 'equivalence sets'

  - If the input is a number between (and including) 25 and 300

  - Equivalence partitions: < 25, 25 - 300, > 300

- Choose test cases at the boundary of these sets

  - 25, 300
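A minimal sketch of the 25 to 300 example follows. The validator `accepts` and the helper `partition` are hypothetical. Besides the boundary values 25 and 300, the sketch also probes the values just outside the range (24 and 301), which is an assumption added here to exercise the neighboring partitions.

```python
LOW, HIGH = 25, 300


def accepts(n):
    """Hypothetical validator: True when n lies in the inclusive range."""
    return LOW <= n <= HIGH


def partition(n):
    """Name the equivalence partition that n falls into."""
    if n < LOW:
        return "below"
    if n > HIGH:
        return "above"
    return "valid"


# One representative per partition, plus the values at and next to each boundary.
representatives = {"below": 10, "valid": 150, "above": 500}
boundary_inputs = [LOW - 1, LOW, HIGH, HIGH + 1]

results = {n: accepts(n) for n in boundary_inputs}
```

An off-by-one fault such as writing `LOW < n` would pass the representative 150 but be caught by the boundary input 25.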

Search-Routine Specification

- Preconditions: The array has at least one element

- Postconditions: Either the element is not in the array and the return value is -1, or the element is in the array at the returned position

Search Routine: Input Partitions

- Inputs that conform to the preconditions

- Inputs where a single precondition does not hold

- Inputs where the key element is a member of the array

- Inputs where the key element is not a member of the array

Testing Guidelines: Sequences

- Test software with sequences that have only a single value

- Use sequences of different sizes in different tests

- Derive tests so that the first, middle, and last elements of the sequence are accessed

- Test with sequences of zero length
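The guidelines can be applied to the search routine as sketched below. The function `search` is a hypothetical linear search written to match the stated postconditions, and the specific values are illustrative. Returning -1 for an empty sequence is an assumption, since the specification says nothing about inputs that violate the precondition.

```python
def search(key, items):
    """Return the index of key in items, or -1 if key is absent."""
    for i, item in enumerate(items):
        if item == key:
            return i
    return -1


# (key, sequence, predicted result)
cases = [
    (17, [17], 0),                            # single value, key present
    (0, [17], -1),                            # single value, key absent
    (17, [17, 29, 21, 23], 0),                # key is the first element
    (45, [41, 18, 9, 31, 30, 16, 45], 6),     # key is the last element
    (23, [17, 18, 21, 23, 29, 41, 38], 3),    # key is the middle element
    (25, [21, 23, 29, 33, 38], -1),           # key absent
    (5, [], -1),                              # zero length: precondition violated
]

outcomes = [search(key, seq) == expected for key, seq, expected in cases]
```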

Sorting

- Sort a list of numbers

- The list is between 2 and 1000 elements

- Domains:

  - The list has some item type (of little concern)

  - n is an integer value (sub-range)

- Equivalence classes:

  - n < 2

  - n > 1000

  - 2 <= n <= 1000

Sorting

- What do you test?

- Not all cases of integers

- Not all cases of positive integers

- Not all cases between 1 and 1001

- The highest payoff for detecting faults is to test around the boundaries of equivalence classes.

- Test with lists of sizes 1, 2, 1000, and 1001, plus one size in the middle, e.g., 10

- Five test sequences versus 1000
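The five sizes can be run as a small harness. The routine `sort_list` is hypothetical, and having it reject out-of-range sizes with `ValueError` is an assumption, since the slides do not say how invalid sizes are handled.

```python
import random
from collections import Counter

MIN_N, MAX_N = 2, 1000


def sort_list(values):
    """Hypothetical routine under test: sorts lists of 2 to 1000 numbers."""
    if not MIN_N <= len(values) <= MAX_N:
        raise ValueError("list size out of range")
    return sorted(values)


def check(n, rng):
    """Run one test with a random list of size n and classify the outcome."""
    values = [rng.randint(-10**6, 10**6) for _ in range(n)]
    try:
        result = sort_list(values)
    except ValueError:
        return "rejected"
    ordered = all(a <= b for a, b in zip(result, result[1:]))
    same_items = Counter(result) == Counter(values)
    return "sorted" if ordered and same_items else "wrong"


rng = random.Random(0)  # fixed seed so the run is repeatable
report = {n: check(n, rng) for n in (1, 2, 10, 1000, 1001)}
```

The oracle checks two properties (the output is ordered and is a permutation of the input) rather than comparing against a second sort.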

White-box testing

- Sometimes called structural testing or glass-box testing

- Derivation of test cases according to program structure

- Knowledge of the program is used to identify additional test cases

- The objective is to exercise all program statements (not all path combinations)

Types of structural testing

- statement coverage

- Test cases that will execute every statement at least once

- Tools exist to help

- No guarantee that the test properly tests all branches. Loop exit?

- branch coverage

- All branches are tested at least once

- path coverage, restricted to certain types of paths:

- Linear code sequences

- Definition/Use checking (all definition/use paths)

- Can locate dead code
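The gap between statement coverage and branch coverage shows up even in a tiny function. The function `clamp_to_zero` is hypothetical, and `branches_taken` is a hand-written stand-in for a coverage tool: it simply evaluates the `if` condition for each test input.

```python
def clamp_to_zero(x):
    """Return x, except that negative values are replaced by 0."""
    result = x
    if x < 0:
        result = 0
    return result


# This single test executes every statement of clamp_to_zero ...
statement_suite = [(-5, 0)]
# ... but only the second suite also takes the False outcome of the if.
branch_suite = [(-5, 0), (3, 3)]


def branches_taken(suite):
    """Return the set of outcomes of the condition x < 0 seen by a suite."""
    return {x < 0 for x, _ in suite}


statement_only = branches_taken(statement_suite)
both_branches = branches_taken(branch_suite)
```

With the first suite, a fault on the path that skips the `if` body would go unnoticed even though every statement was executed.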

White Box Testing: Binary Search

Binary Search Equivalence Partitions

- Preconditions satisfied, key element in the array

- Preconditions satisfied, key element not in the array

- Preconditions unsatisfied, key element in the array

- Preconditions unsatisfied, key element not in the array

- Input array has a single value

- Input array has an even number of values

- Input array has an odd number of values
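A sketch of test cases drawn from these partitions is given below. The implementation `binary_search` is an assumption written for illustration, not the routine from the slides, and it additionally assumes the array is sorted. The empty array stands in for the "preconditions unsatisfied" partitions. No prediction is made for an unsorted array that contains the key, because the specification does not define that behavior.

```python
def binary_search(key, items):
    """Return an index of key in the sorted list items, or -1 if absent."""
    low, high = 0, len(items) - 1
    while low <= high:
        mid = (low + high) // 2
        if items[mid] == key:
            return mid
        if items[mid] < key:
            low = mid + 1   # key can only be in the upper half
        else:
            high = mid - 1  # key can only be in the lower half
    return -1


# (key, sorted array, predicted result)
cases = [
    (17, [17], 0),                    # single value, key present
    (0, [17], -1),                    # single value, key absent
    (21, [17, 21, 23, 29], 1),        # even number of values, key present
    (25, [17, 21, 23, 29], -1),       # even number of values, key absent
    (23, [9, 16, 18, 23, 31], 3),     # odd number of values, key present
    (20, [9, 16, 18, 23, 31], -1),    # odd number of values, key absent
    (5, [], -1),                      # precondition unsatisfied (empty array)
]

outcomes = [binary_search(key, arr) == expected for key, arr, expected in cases]
```

The even and odd partitions come from knowledge of the code: the midpoint calculation behaves differently for the two, which a pure black-box view would not suggest.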

Path testing

Objective: Ensure that the set of test cases covers the program so that each path through it executes at least once

- The starting point is a program flow graph that shows nodes representing program decisions and arcs representing the flow of control

- Statements with conditions are, therefore, nodes in the flow graph
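A flow graph can be written down as an adjacency mapping and its paths enumerated. The graph below is hypothetical and models a function with two consecutive `if` statements. It is deliberately acyclic: a graph with loops has unboundedly many paths, so the enumeration would need a bound on loop iterations.

```python
# Hypothetical flow graph: each node maps to the nodes control can reach next.
flow_graph = {
    "entry": ["if_a"],
    "if_a": ["then_a", "if_b"],   # decision node: two outgoing arcs
    "then_a": ["if_b"],
    "if_b": ["then_b", "exit"],   # decision node: two outgoing arcs
    "then_b": ["exit"],
    "exit": [],
}


def all_paths(graph, node, goal):
    """List every path from node to goal in an acyclic flow graph."""
    if node == goal:
        return [[node]]
    return [[node] + rest
            for successor in graph[node]
            for rest in all_paths(graph, successor, goal)]


paths = all_paths(flow_graph, "entry", "exit")
```

Two independent decisions give four paths, so path testing of this function would need at least four test cases, one per path.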

Feasibility

- Pure black box testing (specification) is realistically impossible because there are (in general) too many test cases to consider.

- Pure testing to code requires testing every possible path through the flow graph. Covering all paths is, in general, infeasible, and even covering every path does not guarantee correctness.

- Normally, testers use a combination of black box and glass box testing

Integration testing

- Tests complete systems or subsystems composed of integrated components

- Integration testing should be black-box testing with tests derived from the specification

- The main difficulty is localizing errors

- Incremental integration testing reduces this problem

Approaches to integration testing

- Top-Down Testing

- Start with a high-level system and integrate from the top down, replacing individual components with stubs where appropriate

- Bottom-Up Testing

- Start with the individual components and integrate from the bottom up, stopping when there are no more levels

- In practice, most integration involves a combination of these strategies
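The role of a stub in top-down integration can be sketched as follows. The classes `Checkout` and `TaxServiceStub` and the 10% rate are hypothetical: the higher-level component is tested first, with the lower-level component it depends on replaced by a stub that returns a canned answer.

```python
class TaxServiceStub:
    """Stub for a lower-level component that is not yet integrated."""

    def rate_for(self, region):
        return 0.10  # canned answer; the real component would look this up


class Checkout:
    """Higher-level component under test."""

    def __init__(self, tax_service):
        self.tax_service = tax_service

    def total(self, subtotal, region):
        rate = self.tax_service.rate_for(region)
        return round(subtotal * (1 + rate), 2)


# Top-down step: exercise Checkout against the stub.
checkout = Checkout(TaxServiceStub())
total = checkout.total(200.0, "anywhere")
```

When the real tax component is ready, it replaces the stub and the same test is rerun. A failure at that point is localized to the newly integrated component or its interface.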

Software testing metrics

- Error/defect rates

  - Number of errors

  - Number of errors found per person-hour expended

- Measured by:

  - individual

  - module

  - during development

- Errors should be categorized by origin, type, and cost
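The errors-per-person-hour rate, measured by module, can be computed as sketched below. The log entries are made-up illustrative values, not measurements, and the function name is hypothetical.

```python
from collections import defaultdict

# Hypothetical log entries: (module, errors found, person-hours expended)
log = [
    ("parser", 6, 12.0),
    ("parser", 2, 4.0),
    ("ui", 3, 10.0),
]


def errors_per_person_hour(entries):
    """Return errors found per person-hour expended, grouped by module."""
    errors = defaultdict(int)
    hours = defaultdict(float)
    for module, found, spent in entries:
        errors[module] += found
        hours[module] += spent
    return {module: errors[module] / hours[module] for module in errors}


rates = errors_per_person_hour(log)
```

Grouping by individual or by development phase follows the same pattern with a different key in each log entry.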